In one of my previous posts, Is Bitlooker from Veeam a game-changer?, I wrote about the benefits of using Bitlooker for backup jobs when using Veeam Backup & Replication v9.x however Bitlooker is a feature that is not only available for backup jobs – you can use it for replication jobs as well.

So I thought it’d be fun to see what difference, if any, it makes. The goal of my tests is to figure out the most effective way of copying/replicating a VM from one host to another.

The set up for the test:

A virtual machine is installed with Windows Server 2016 standard edition. 100 GB disk assigned to the VM, thin provisioned. The disk is then filled with files (a bunch of iso-files of different sizes). That’s the baseline, then roughly 85 GB will be deleted (all the added iso-files) – then trashcan will be emptied. So we’ll have some blocks containing stale/old data, the blocks are marked as available to be reused from the operating system point of view but they haven’t been zeroed out so from any hypervisor (outside the VM) it just looks as any other block containing data.

Operating system installed (Windows Server 2016) and updated. The VM now consumes 13,5 GB worth of storage.

Then a bunch of files were added (almost) filling the entire disk.

From the vSphere side of it:

At this point the just added files were removed and trashcan emptied.

And from vSphere:

Now the command ‘ls’ will not show the actual size, so ‘du’ can be used instead to see the actual size of the vmdk file:

I’m going to test 4 different scenarios:

- Migrate the VM from one host to another offline (VM will be shutdown).

- Replicating the virtual machine with VMware vSphere Replication 6.5.

- Replicating the virtual machine with Veeam Backup & Replication without Bitlooker enabled.

- Replicating the virtual machine with Veeam Bitlooker enabled.

The thesis, or point to prove, is that test 1-3 will have no or little impact on the size of the vmdk file however – magic will happen on test 4. So lets perform the tests and find out for real!

Test 1:

VM moved to another host while offline and now let’s explore what can be seen using different methods.

Inside the VM:

From the host:

So no change in vmdk file size as expected.

Test 2:

The virtual machine will be replicated to another host using VMware vSphere Replication 6.5.

VMware vSphere has been configured using the following settings:

Not alot of settings, in fact, the above settings will have no impact on the vmdk size. They will only have control how the snapshot on the VM will be generated (crash consistent vs consistent backup) and the impact the replication job will have on the network.

Inside the VM:

Since ‘ls’ doesn’t show the actual size on a thin disk, disk usage ‘du’ is used instead:

So no change in vmdk file size as expected.

Test 3:

The virtual machine is replicated to another host using Veeam Backup & Replication v9.5.

Replication from a Veeam perspective has been set up, to make a fair comparison to the VMware replication test (test 2), the Veeam job will not use exclude swap file blocks:

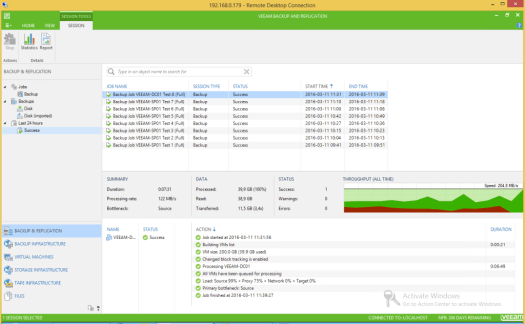

Processed and read data in the picture below tells us that Veeam doesn’t know the difference between blocks in use and blocks marked as deleted (the same applies for almost all backup vendors):

Inside the VM:

From the host:

And using ‘du’:

So no change in vmdk file size as expected.

Test 4:

Know time for the fun stuff. The virtual machine will be replicated to another host using Veeam Backup & Replication v9.5. We will use both space saving techniques we can enable on the job (with application-aware processing we can also exclude specific files, folders, file extensions but we’re not using that feature in this test)

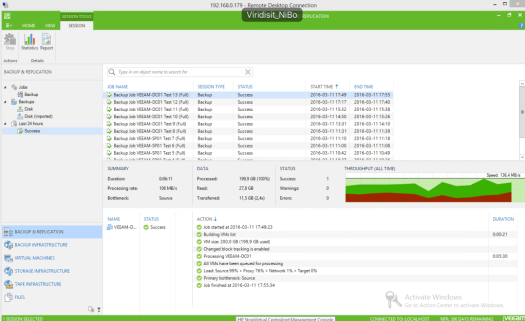

Now, this is the magic we were looking for! The proxy server has processed all of the data but it has only read data that contain used blocks!

From the VM:

From the host:

Now the vSphere web client combine the .vmdk and -flat.vmdk file into one (like it’s done forever):

And the disk usage utility shows:

Yikes! That’s cool stuff!

Conclusion:

Bitlooker is a feature you should have enabled on any relevant job. It certainly can be used to reclaim that precious storage space you so desperately need. Heck, why no use it as part of your normal failover testing, cause you’ll already doing that right? Once a month (or how often you feel appropriate) do a planned failover using Veeam Backup & Replication, verify that you DR plan works and as an added bonus you reclaim disk space in the process!

And yet another benefit is the spent replicating the virtual machine, without Bitlooker it took 30 minutes to replicate the VM from one host to another but it was just shy of 7 minutes with Bitlooker enabled.

So seriously, why are you not using this magic thing? There’s only one drawback, Bitlooker supports only NTFS file system (=Windows VMs).

You must be logged in to post a comment.