Disclaimer: This is not a performance comparison or test in any way, shape or form, it is merely my experience in a lab environment supposed to show an indicative relative difference in performance at a high level. OK, you get the picture?

So as you may know, Veeam introduced a new feature in version 9 of Backup & Replication, and I thought it would be fun to see if there were any actual benefits of using the feature, called “Bitlooker”, by doing some basic tests. The goal of my test was to see if there were any differences between running backup jobs with or without backing up dirty blocks (i.e. blocks that still contain data but have been marked as deleted, so they can be reused by the operating system). Skipping those blocks is the actual functionality of Bitlooker. My starting position was that it logically makes sense that using Bitlooker would be more efficient in a lot of ways, but I wanted to see if it was quantifiable: difference in speed and/or backup file size – again at a high level. As an extended test I also wanted to see if there was any notable difference between using Bitlooker and SDelete (a tool from Sysinternals that you run inside the guest OS to clean up dirty blocks by writing zeros to those blocks).

Description of the Test environment

A Veeam Backup & Replication environment was set up, using simple deployment (all services on a single VM). A virtual machine running Windows Server 2012 on a vSphere 5.5 host was set up as the VM to be backed up. For anyone familiar with the VMCE lab environment, that’s basically what I was using, with an extended drive on one of the domain controllers. The domain controller was assigned a 200 GB thin disk. I filled the disk with roughly 190 GB worth of data, deleted roughly 160 GB of it and then went ahead with some comparison tests. To describe what actually happens inside the OS at this point: only the pointers to the blocks containing information are deleted, not the actual data on the blocks themselves. This means that the OS knows the actual consumption on disk, but for an outside observer (vSphere and Veeam, for instance) it makes no difference: a block containing data is a block containing data.
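To make the "a block is a block" point a bit more concrete, here is a minimal toy model in Python. It is only a sketch of the idea, not how NTFS, vSphere or Veeam are actually implemented: the `ToyDisk` class, both backup functions and the 1 GB block size are made up for illustration, and the numbers just mirror the rough 190 GB written and 160 GB deleted from my lab.

```python
# Toy model only: NOT how NTFS or Veeam work internally.
# Each block stands in for 1 GB of a 200 GB disk (an assumption for readability).
BLOCK_COUNT = 200


class ToyDisk:
    def __init__(self, block_count):
        self.blocks = [b""] * block_count  # raw contents, as seen from outside the guest
        self.files = {}                    # file name -> block indexes (the "pointers")

    def allocated(self):
        """Blocks the guest file system still considers in use."""
        return {i for indexes in self.files.values() for i in indexes}

    def write_file(self, name, block_total):
        in_use = self.allocated()
        free = [i for i in range(len(self.blocks)) if i not in in_use]
        if block_total > len(free):
            raise ValueError("disk full")
        chosen = free[:block_total]
        for i in chosen:
            self.blocks[i] = f"{name}:{i}".encode()
        self.files[name] = chosen

    def delete_file(self, name):
        # Only the pointers are removed; the data stays on the blocks.
        del self.files[name]


def backup_all_written_blocks(disk):
    """Outside view: every block that holds data gets backed up."""
    return [i for i, data in enumerate(disk.blocks) if data]


def backup_skipping_deleted_blocks(disk):
    """Hypothetical Bitlooker-style view: skip blocks no file points to."""
    live = disk.allocated()
    return [i for i, data in enumerate(disk.blocks) if data and i in live]


disk = ToyDisk(BLOCK_COUNT)
disk.write_file("os", 30)
disk.write_file("bulk", 160)
disk.delete_file("bulk")

print(len(backup_all_written_blocks(disk)))       # 190
print(len(backup_skipping_deleted_blocks(disk)))  # 30
```

The only difference between the two functions is whether the guest's own view of what is allocated gets consulted, which is roughly what separates the test scenarios in this post.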

Since I’m running the tests on my brand new toy, a Gen 6 Intel NUC, which is not an enterprise server, I’m not that interested in the actual performance figures but rather in the relative difference between the test scenarios, all else being equal. Oh, but there’s just one thing: Bitlooker only works with Windows and the NTFS filesystem.

I initially performed some tests on a 40 GB VM but felt that, even though I saw some great improvements, I couldn’t draw any actual conclusions based on them.

I quickly realized – “We’re gonna need a bigger boat!” and went for the 200 GB drive instead.

Since I had already performed some initial tests on my 40 GB environment, the relevant scenarios at this point start at Test 8. All backup jobs were set up to take an active full backup of the VM:

Test 8: A 200 GB vmdk where approx 40 GB was consumed. Basic backup test without Bitlooker enabled.

The resulting vbk-file from the 200 GB vmdk containing 40 GB of data is 12 GB in size.

Test 9: A lot of files were added to the VM, roughly 150 GB worth of data. Now the total consumed size of the disk is 191 GB. Again a basic backup test without Bitlooker enabled.

A quick look shows the vmdk to be 200 GB in size.

With compression and deduplication the vbk-file ends up 95 GB in size.

Test 10: Now the fun begins, 163 GB of data was deleted and backup runs without Bitlooker.

Even though the files inside the OS have been deleted, the actual vmdk-file size doesn’t change, and that’s expected behaviour from a thin disk. It wouldn’t change even if you performed a Storage vMotion.

This is where it starts getting interesting: the vbk-file is still the same size as we saw in the previous test, 95 GB! Logically it should be smaller since we’ve deleted 163 GB of data, but clearly from the outside it doesn’t matter – a block is a block is a block.

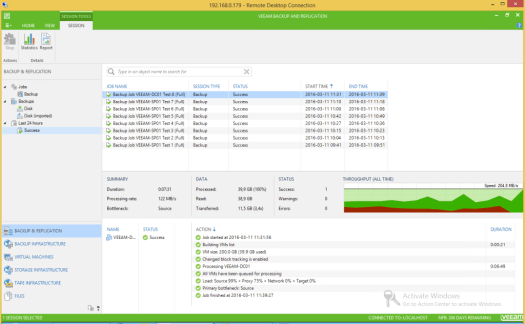

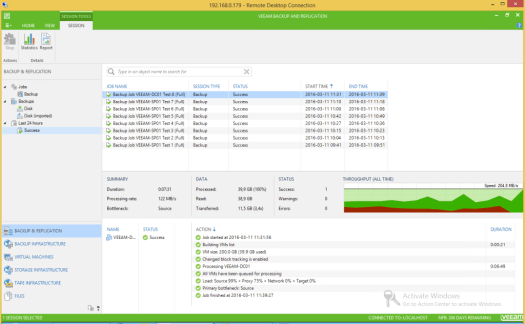

Test 11: This is where we put Bitlooker to the test, same scenario as Test 10 but Bitlooker has been enabled on the backup job. That’s the only difference, a tick in a box.

One big thing to highlight in the picture above is the time spent backing up the virtual machine: 6 minutes compared to almost 36 minutes in “Test 10”. That’s a huge difference, and the end result still contains the exact same active data from the VM.

Massive reduction: we’re now seeing vbk-sizes similar to the initial backup test in “Test 8”.

Test 12: So let’s see if all types of “zeroing”-techniques are equal. We’re now using SDelete to do its job on the C-drive, zeroing out all deleted blocks, and the backup job runs without Bitlooker.

It performs faster than “Test 10” but slower than “Test 11”, that’s interesting! I wasn’t really expecting such a big difference I must admit.

The backup file size is identical to “Test 10” though, and that was expected.

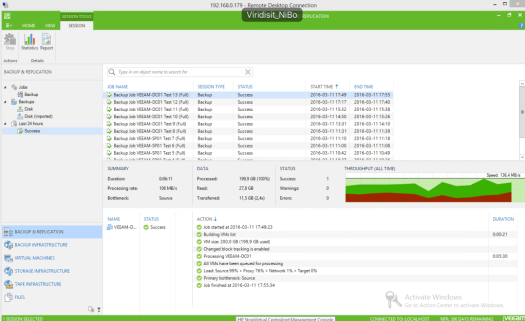

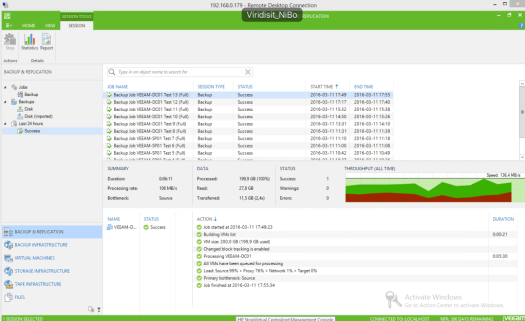

Test 13: So for fun let’s enable Bitlooker on the job, so SDelete and Bitlooker in conjunction.

Bitlooker comes into play again, reducing the backup time to 6 minutes.

No change in backup file size, that was expected. So there’s no benefit whatsoever in using SDelete in this scenario.

Test 14 – Extra test: Since there was such a difference between “Test 12” and “Test 13”, let’s verify the behaviour by disabling Bitlooker and running the job again. This should mean more time spent on the backup, right?

Yep, we’re back again pushing past 23 minutes for the job.

Backup file size doesn’t change.
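If you want to put numbers on the relative differences, the arithmetic is simple. The sketch below uses the approximate job durations mentioned in this post ("almost 36", 6 and "past 23" minutes), rounded to whole minutes, so treat the output as indicative only. The `relative_difference` helper is just something I made up for the calculation.

```python
# Approximate job durations in minutes, rounded from the figures quoted in the post.
runs = {
    "Test 10 (deleted data, no Bitlooker)": 36,
    "Test 11 (Bitlooker)": 6,
    "Test 13 (SDelete + Bitlooker)": 6,
    "Test 14 (SDelete, no Bitlooker)": 23,
}


def relative_difference(baseline_minutes, minutes):
    """Return (speedup factor, percent of time saved) against a baseline run."""
    speedup = baseline_minutes / minutes
    saved_pct = 100 * (baseline_minutes - minutes) / baseline_minutes
    return speedup, saved_pct


baseline = runs["Test 10 (deleted data, no Bitlooker)"]
for name, minutes in runs.items():
    speedup, saved_pct = relative_difference(baseline, minutes)
    print(f"{name}: {minutes} min, {speedup:.1f}x vs Test 10, {saved_pct:.0f}% less time")
```

With these rounded inputs the Bitlooker runs come out at about six times faster than “Test 10”, which is the relative difference I care about here rather than the absolute minutes.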

Conclusion

Reducing the amount of data to back up from your virtual machines has always been a good thing. However, historically it has been a manual or at least semi-manual task to accomplish, running SDelete.exe or some equivalent tool for instance. Perhaps it ran as a scheduled task, but nonetheless it had to run on the VM itself and would require time and valuable CPU and I/O resources in the process. It was also prone to human “error”, since someone had to actually run the command or set up a schedule for it, meaning it might be forgotten or missed when setting up new VMs. It’s worth mentioning that SDelete is a few years old and that there are other tools available you can use, one of which is a fling from VMware called GuestReclaim, but I couldn’t use it in this case since it doesn’t work under Windows Server 2012.

Bitlooker changes the game completely: you literally only have to tick a box on a backup job to make use of it! Faster backup times and smaller backup files, so are there any drawbacks? Well, yes and no. If you’re a Windows shop, go nuts with it, but if you’re running any other OS you simply won’t be able to use Bitlooker.

Bitlooker is a great new feature from Veeam: not only does it reduce the storage space required to hold your backup files, it also reduces backup time significantly for your active full backups (to be clear, it will also make a difference on your incremental backups). As I already mentioned, the tests were done in a tiny lab environment, but they can still act as an eye-opener in terms of the relative difference with or without Bitlooker enabled. Your own mileage may vary, but you will see improvements. I tested 1 VM just for fun, but I bet you have a lot more VMs. Do you think you will save time? I’m sure of it!

“So what about cost? How much do I need to fork out for this great new feature you speak of?” I hear you say. That’s almost the best part: Nothing! You don’t need to pay anything! As long as you have a valid license and support contract you can just upgrade, it’s included in version 9 of Backup & Replication and for all editions: Standard, Enterprise and Enterprise Plus. For all new jobs created it’s even enabled by default and it can easily be enabled on your existing jobs – just tick the box. Thank you Veeam!

I would recommend enabling this feature on all your backups if you haven’t done so already. Period.

So ending with answering my question in the subject: Yes! It really is.


